Intel, AMD, and Google Ride the Wave of AI Acceleration

The latest advancements in AI hardware, ranging from enterprise solutions to cloud-based accelerators, are empowering developers to create more sophisticated and larger AI models than ever before.

Major technology firms are increasingly adopting specialized AI accelerators to meet the growing demands of AI workloads across data centers and edge computing. While AI deployment can be facilitated by standard processors, the use of dedicated hardware provides the scalability and enhanced performance needed to engineer cutting-edge AI models.

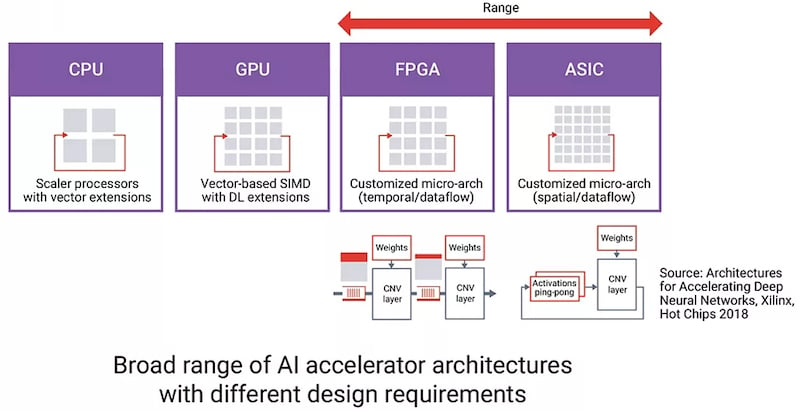

AI accelerators are available in many different architectures and provide parallel processing to accelerate the training, testing, and deployment of complex models.

Much as application-specific integrated circuits (ASICs) boost performance for compute-intensive tasks such as Bitcoin mining, companies such as AWS and Microsoft have shifted toward custom silicon to handle the heavy demands of production-scale AI workloads. In this article, we'll look at three new AI accelerators from Intel, AMD, and Google, examine what distinguishes each, and discuss how the move toward AI-dedicated silicon may signal a new era in computing.

Intel Homes In on Integrated AI Acceleration at the Core Level

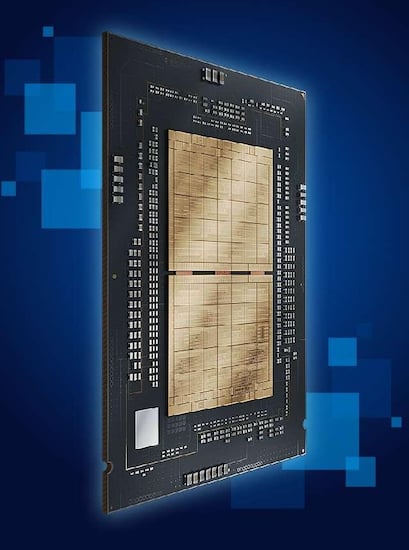

Kicking off the lineup, Intel has unveiled its latest Xeon processors, code-named Emerald Rapids, which build AI acceleration into every core. As a result, Intel reports that its 5th-generation Xeon processors deliver an average performance increase of 21% and up to 42% higher AI inference performance compared with the previous generation. Intel also claims that the new generation can cut total cost of ownership by up to 77% for customers upgrading on a five-year replacement cycle. This positions Emerald Rapids as an attractive option for system architects seeking to improve AI or high-performance computing (HPC) performance ahead of more demanding future workloads.
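Percentage gains like these are easy to misread, so a quick conversion may help. The sketch below turns the 21% and 42% figures quoted above into speedup factors and into the corresponding reduction in run time for a fixed job. It assumes, as an idealization, that run time scales inversely with performance; the function names are illustrative and do not come from Intel.

```python
def speedup_from_gain(percent_gain: float) -> float:
    """Convert a 'percent more performance' figure into a speedup factor."""
    return 1.0 + percent_gain / 100.0


def time_saved_percent(percent_gain: float) -> float:
    """Percent reduction in run time for a fixed job.

    Assumption: run time is inversely proportional to performance,
    which real workloads only approximate.
    """
    return (1.0 - 1.0 / speedup_from_gain(percent_gain)) * 100.0


# Figures as quoted in the article (vendor-reported, not measured here).
for label, gain in [("average", 21.0), ("AI inference (up to)", 42.0)]:
    print(f"{label}: {speedup_from_gain(gain):.2f}x speedup, "
          f"{time_saved_percent(gain):.1f}% less time per job")
```

Under that assumption, a 21% performance gain shortens a fixed job by roughly 17%, not 21%, and a 42% gain shortens it by just under 30%.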

5th-gen Intel Xeon processors show improved general compute and AI-focused performance, giving new capabilities to edge and data center devices.

The Xeon processor is not a standalone AI accelerator, but it is designed to handle "intensive AI workloads end-to-end before there's a necessity to incorporate separate accelerators." This core-level AI capability aligns with Intel's "AI Everywhere" initiative, as these processors are suitable for deployment in both data center environments and edge computing devices. Designers can thus take advantage of the improved AI performance while still working within a familiar system architecture. Consequently, designers who need additional performance but not the bespoke optimization that dedicated accelerators provide stand to benefit most from Intel's latest processors. This approach bridges the gap between standard processing and specialized AI acceleration, offering a pragmatic performance boost for a wide range of AI applications.

AMD Releases Discrete Accelerators

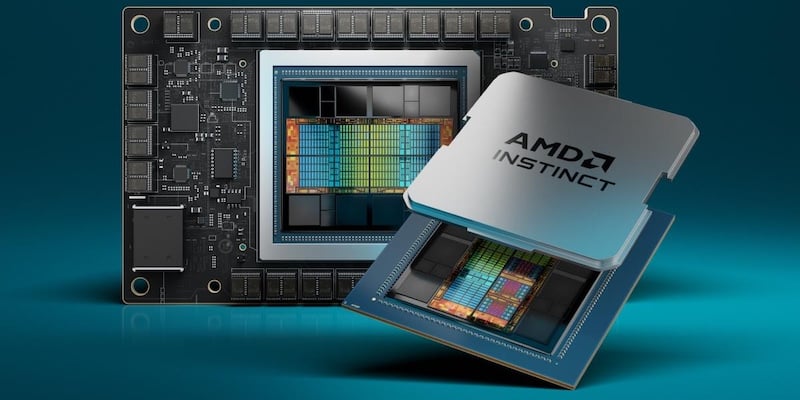

On the discrete side of AI hardware, AMD also recently announced the MI300 series of accelerators, bringing new improvements in memory bandwidth for data-heavy applications such as generative AI or large language models. The two new products, the MI300X and MI300A, each target unique applications that demand high levels of memory performance.

According to AMD, the MI300X accelerators offer 40% more compute units and up to 1.7x the memory bandwidth compared with previous generations, accelerating more complex and data-heavy applications. The MI300A accelerated processing unit (APU), on the other hand, combines general-purpose processing on AMD’s Zen 4 core architecture with HPC/AI acceleration.

AMD AI accelerators provide designers with the memory capacity and bandwidth required for complex, high-level AI models.

Companies such as Microsoft, Dell, and HPE have already revealed AI-accelerated devices using the MI300 series, highlighting the growing need for dedicated AI accelerators.

Google Ups Cloud-Based AI Acceleration

For designers looking to leverage the benefits of AI acceleration without building a device from scratch, Google has recently shown off its latest AI model using custom Tensor Processing Units (TPUs). While Google TPUs are not commercially available, they address a key market for cloud-based AI solutions.

Google TPUs in a data center provide software designers access to accelerated AI capabilities, enabling faster innovation and improved performance.

Although system architects certainly benefit from custom AI solutions, not all designers need commercial products to accelerate their work. As such, software-focused designers can simply leverage Google's acceleration to test, train, and deploy models virtually.

As a result, hardware and software designers alike can benefit from AI-focused innovation.

Setting New Limits

Although each technology covered here targets a different market segment, each one illustrates the growing need for AI acceleration and AI-focused hardware. As AI is increasingly considered a solution for more complex problems outside the engineering world, a shift toward dedicated acceleration hardware may be necessary to support the volume of calculations required to train larger models.